GRAVY PRODUCT INSIGHTS SERIES

Why Data Normalization and Quality Assurance Improve Analysis and Insights

April 28, 2022

When evaluating different data sources, it can be tempting to buy the biggest data set at the lowest cost. In the location data world, this typically means raw mobile location data that has not been verified or normalized to account for periodic fluctuations in supply. Cheap location data usually carries a higher price tag than most companies expect, including the following hidden costs:

- Additional resources to process, normalize, and prep the data for analysis

- Removal of up to 45% of the raw data that is identified as redundant or erroneous

- Extra fees to store that unusable data

- And most importantly, the loss of trust in the accuracy of the insights that result from analysis of low-quality data

That last point is key: if you can’t trust the data, you can’t trust the analysis, and the entire value of your data purchase is lost. Trustworthy data rests on two components: quality assurance and normalization.

Quality Assurance

Each location data provider has its own measures for ensuring the quality of the data it delivers to customers. Gravy’s rigorous quality processing uses our proprietary AdmitOne™ processing engine and Location Data Forensics to filter and categorize location signals, ultimately verifying consumer visits to millions of places and events. That covers quality, but why is it so important that the data also be normalized?

Data Normalization

Every data provider experiences fluctuations in its overall supply. These can stem from compliance regulations that affect data supply market-wide, from a provider adding or removing suppliers, or from a number of other factors. If a provider does not normalize its data to account for those changes, the supply fluctuations will skew the results of your analysis. Even a data set of the highest quality cannot be fully trusted by analysts if it is not normalized.
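To make the idea concrete, here is a minimal sketch of the simplest kind of supply normalization: rescaling each day's raw visit count by the size of the device panel that day, relative to a fixed baseline panel size. This is a generic illustration, not Gravy's method. The `DailyCount` record, its fields, and the `normalize_by_supply` function are hypothetical, and the sketch assumes the provider knows how many active devices were in its supply each day.

```python
from dataclasses import dataclass


@dataclass
class DailyCount:
    day: str            # ISO date of the observation (hypothetical field)
    raw_visits: int     # visits observed at the location that day
    panel_devices: int  # active devices in the provider's supply that day (assumed known)


def normalize_by_supply(counts, baseline_devices):
    """Rescale raw visits as if the device panel had stayed at baseline_devices.

    A generic illustration only: a day observed with half the baseline supply
    has its visit count doubled, and so on.
    """
    normalized = []
    for c in counts:
        if c.panel_devices <= 0:
            raise ValueError(f"no supply recorded for {c.day}")
        normalized.append(c.raw_visits * baseline_devices / c.panel_devices)
    return normalized


if __name__ == "__main__":
    counts = [
        DailyCount("2021-03-01", raw_visits=200, panel_devices=1_000_000),
        DailyCount("2021-05-01", raw_visits=100, panel_devices=500_000),
    ]
    print(normalize_by_supply(counts, baseline_devices=1_000_000))
```

Here the panel shrinks by half and raw visits fall by half, so the normalized series stays flat. That is the desired behavior when foot traffic at the location has not actually changed and only the provider's supply has.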

Our Approach to Normalization

Gravy has taken a thoughtful approach to normalizing our Visitations data, applying multiple adjustment factors. These factors smooth out outliers, adjust for estimated mobile device visits not fully verified by Gravy’s system, and account for fluctuations in our supply, so analysts can trust the adjusted data to present a clearer view of foot traffic trends over time. To illustrate the importance of data normalization and quality assurance, let’s look at a real-world example using Gravy Visitations.
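“Smoothing out outliers” can be done in many ways. One common generic technique is a centered rolling median, sketched below under the assumption that visits arrive as a simple list of daily counts. The `rolling_median` function and its `window` parameter are hypothetical and do not represent Gravy's adjustment factors, which this post does not detail.

```python
from statistics import median


def rolling_median(values, window=7):
    """Centered rolling median over a list of daily counts.

    A generic smoothing technique, shown for illustration only. Near the
    start and end of the series the window is truncated to the values that
    exist, so edge points use fewer neighbors.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo = max(0, i - half)
        hi = min(len(values), i + half + 1)
        smoothed.append(median(values[lo:hi]))
    return smoothed


if __name__ == "__main__":
    # One anomalous day (10 visits) in an otherwise steady series.
    daily_visits = [100, 102, 98, 10, 101, 99, 103]
    print(rolling_median(daily_visits, window=3))
```

A median is used rather than a mean because a single anomalous day, such as a one-day drop in supply, barely moves the median of its window, while it would pull a mean down noticeably.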

Real-World Example: The Impact of Normalization on Gravy Visitations

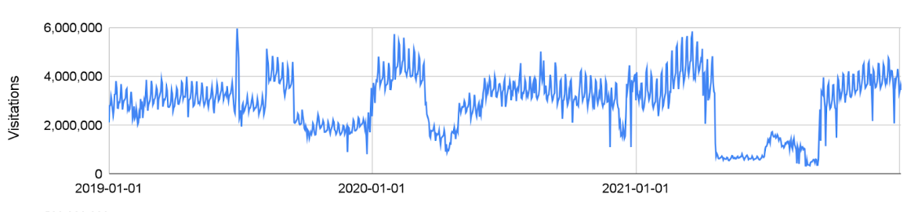

This chart shows how Gravy Visitations trend over time without our data normalization and estimation methodology applied. Visit patterns to this sample location swing dramatically at several points during the three-year period, most notably with large dips in 2021.

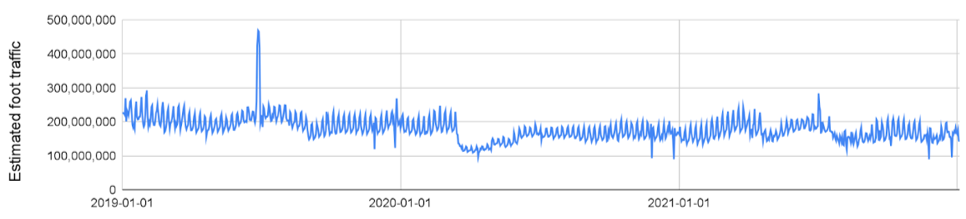

The chart above shows the same data with normalization applied: those swings are smoothed out. Without normalization, the market-wide change in the supply of mobile location signals that came with new privacy legislation in spring 2021 would have completely skewed foot traffic insights from Visitations during that period.

For more information on Gravy Visitations and how it provides insights you can trust, speak with a location intelligence expert from Gravy Analytics today.